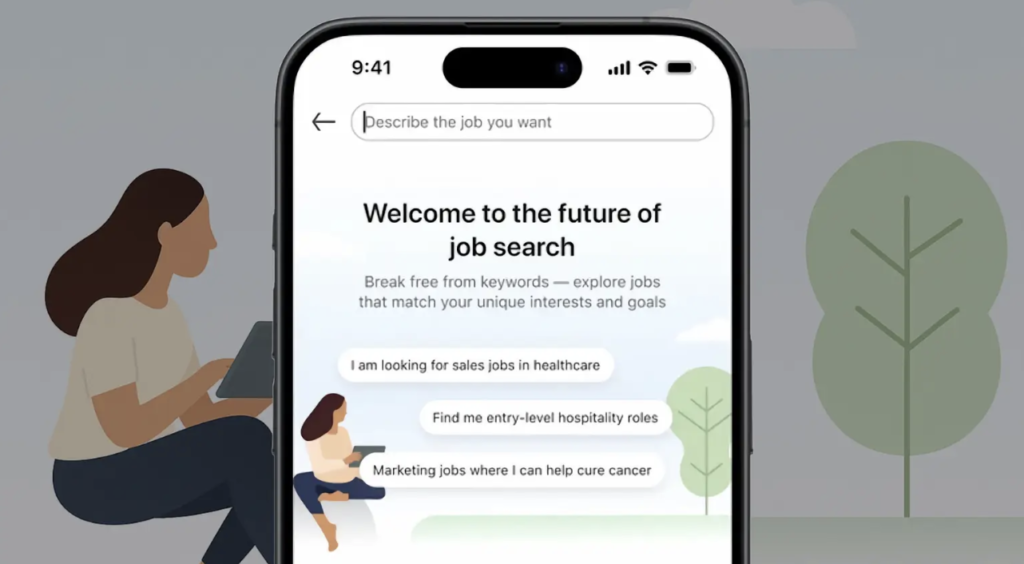

LinkedIn has overhauled its job search, phasing out traditional keyword-based search in favour of an AI-driven system. The new system uses natural language processing to deliver more precise job matches, changing how job seekers and employers connect.

AI-Powered Job Matching

Gone are the days of rigid keyword searches. LinkedIn’s new AI system analyses job descriptions, candidate profiles, and skill sets to surface more relevant matches. According to Rohan Rajiv, LinkedIn’s Product Manager, the platform now interprets natural language queries, so users can search with conversational phrases rather than exact job titles or skills.

For instance, job seekers can now enter queries such as “remote software engineering roles in fintech” or “creative marketing jobs in sustainable fashion” and receive tailored results. Because users no longer need to guess the exact keywords an employer used, the search becomes more accessible and efficient.

Enhanced Features for Job Seekers

The update introduces several user-centric features designed to streamline the job search experience:

- Conversational Search: Users can describe their desired role in natural language, and the AI interprets the query and matches it to roles based on context, skills, and preferences.

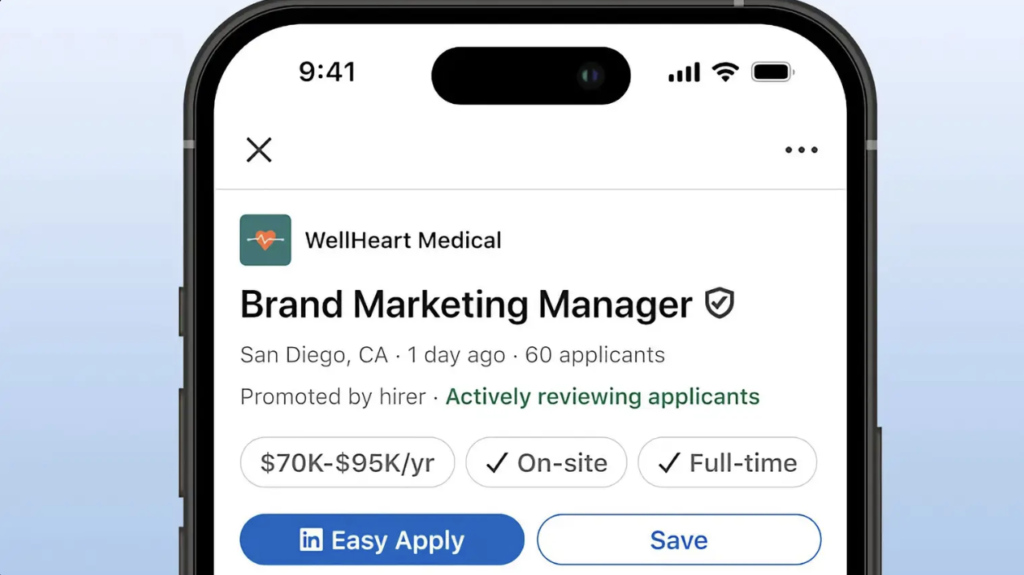

- Application Transparency: LinkedIn now shows when a company is actively reviewing applications, helping candidates prioritise opportunities where a response is more likely.

- Premium Perks: Premium subscribers gain access to AI-powered tools, including interview preparation, mock Q&A sessions, and personalised presentation tips to boost confidence and performance.

A New Era of Job Search Philosophy

LinkedIn’s overhaul reflects a broader mission to redefine job searching. With job seekers outnumbering available roles, mass applications have overwhelmed recruiters. The platform’s AI aims to cut through the noise by guiding candidates toward roles that closely match their skills and aspirations, favouring quality over quantity.

“AI isn’t a magic fix for employment challenges, but it’s a step toward smarter, more meaningful connections,” Rajiv said. By focusing on precision matching, LinkedIn hopes to reduce application fatigue and improve outcomes for both candidates and employers.

Global Rollout and Future Plans

The AI-driven job search is currently available only in English, but LinkedIn plans to expand it to additional languages and markets. The company is also exploring further AI integrations for profile optimisation and career coaching.

The update moves LinkedIn toward a more intelligent, user-friendly job search and positions the company as a leader in using AI to connect talent with opportunity.